| Test result | Actual condition: infected | Actual condition: not infected |
|---|---|---|
| Test shows "infected" | True Positive | False Positive (i.e. infection reported but not present), Type I error |
| Test shows "not infected" | False Negative (i.e. infection not detected), Type II error | True Negative |

Positive and negative refer to the predicted class.

True and false indicate whether that classification is correct.
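As a small illustration of the four cells, the sketch below counts them from two label lists. The function name `confusion_counts` and the sample labels are made up for this example, and it assumes binary labels with 1 meaning positive and 0 meaning negative.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, FP, FN, TN for binary labels (assumed: 1 = positive, 0 = negative)."""
    tp = fp = fn = tn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == 1 and actual == 1:
            tp += 1  # predicted positive, and that is correct
        elif predicted == 1 and actual == 0:
            fp += 1  # predicted positive, but wrong (Type I error)
        elif predicted == 0 and actual == 1:
            fn += 1  # predicted negative, but wrong (Type II error)
        else:
            tn += 1  # predicted negative, and that is correct
    return tp, fp, fn, tn


# Hypothetical sample data for illustration only.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0, 0, 1]
print(confusion_counts(y_true, y_pred))  # (3, 1, 1, 3)
```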

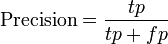

That is, the proportion of detected positives that are actually correct (precision).
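A minimal sketch of that ratio in code, with recall shown next to it for comparison since the later sections rely on both. The function names and the counts are assumptions chosen for illustration, and returning 0.0 for an empty denominator is just one possible convention.

```python
def precision(tp, fp):
    """Fraction of predicted positives that are truly positive: TP / (TP + FP)."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Fraction of actual positives that were detected: TP / (TP + FN)."""
    return tp / (tp + fn) if (tp + fn) else 0.0


# Hypothetical counts: 10 detections of which 8 are right, 16 actual positives.
tp, fp, fn = 8, 2, 8
print(precision(tp, fp))  # 0.8
print(recall(tp, fn))     # 0.5
```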

False positive rate

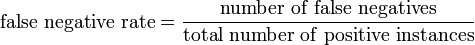

The false positive rate is the proportion of negative instances that were erroneously reported as being positive.

False negative rate

The false negative rate is the proportion of positive instances that were erroneously reported as negative.

Precision - recall

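Both involve a trade-off.

The vertical axis of the ROC curve and the horizontal axis of the PR curve are the same quantity: recall, also called the true positive rate.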
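To make the shared axis concrete, here is a rough sketch that computes one ROC point and one PR point from the same confusion counts. ROC plots the true positive rate against the false positive rate, while PR plots precision against recall. The function name `rates` and the counts are assumptions for illustration, and the code assumes both classes are non-empty so no denominator is zero.

```python
def rates(tp, fp, fn, tn):
    """Return the rates used by ROC and PR curves (assumes no zero denominators)."""
    return {
        "tpr": tp / (tp + fn),        # true positive rate
        "fpr": fp / (fp + tn),        # false positive rate
        "fnr": fn / (tp + fn),        # false negative rate = 1 - TPR
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),     # same formula as TPR
    }


# Hypothetical counts for a single decision threshold.
m = rates(tp=8, fp=2, fn=8, tn=82)
roc_point = (m["fpr"], m["tpr"])          # (x, y) on the ROC curve
pr_point = (m["recall"], m["precision"])  # (x, y) on the PR curve

print(roc_point)
print(pr_point)
print(roc_point[1] == pr_point[0])  # True: ROC's y value is PR's x value
```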

Receiver operating characteristic (ROC)

Recall can be increased by retrieving more items, but this can decrease precision.
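The effect of retrieving more can be seen on a toy ranked list. In the sketch below, `relevance` is a hypothetical list of 0/1 flags in ranked order and `k` is how many items are retrieved; both names and the data are made up for this example.

```python
def precision_recall_at_k(relevance, k):
    """Precision and recall when only the top-k ranked items are retrieved."""
    total_relevant = sum(relevance)  # relevant items in the whole list
    hits = sum(relevance[:k])        # relevant items among the top k
    return hits / k, hits / total_relevant


# Hypothetical ranking: 1 = relevant, 0 = not relevant.
ranked = [1, 1, 0, 1, 0, 0, 1, 0, 0, 0]
for k in (2, 5, 10):
    p, r = precision_recall_at_k(ranked, k)
    print(f"k={k:2d}  precision={p:.2f}  recall={r:.2f}")
```

On this list, going from k=2 to k=10 raises recall from 0.50 to 1.00 while precision falls from 1.00 to 0.40.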

AP (average precision):

It takes the ranking of the retrieved documents into account.

Precision and recall are computed over the whole list of documents returned by the system. Average precision instead rewards returning relevant documents earlier. It is the average of the precisions computed after truncating the list after each relevant document in turn:

$$\mathrm{AP} = \frac{\sum_{r=1}^{N} P(r) \cdot \mathrm{rel}(r)}{\text{number of relevant documents}}$$

where r is the rank, N is the number of documents retrieved, rel() is a binary function indicating whether the document at a given rank is relevant, and P() is the precision at a given cut-off rank.
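A minimal sketch of this computation, following the description of averaging the precision at each relevant rank. The function name and the sample rankings are assumptions for illustration. It divides by the number of relevant documents in the retrieved list; some definitions divide by the total number of relevant documents in the collection, which gives a lower value when some were never retrieved.

```python
def average_precision(relevance):
    """AP for one ranked list of 0/1 relevance flags (rank 1 comes first)."""
    hits = 0
    precision_sum = 0.0
    for r, rel in enumerate(relevance, start=1):
        if rel:
            hits += 1
            precision_sum += hits / r  # P(r), counted only where rel(r) = 1
    # Assumption: normalize by the relevant documents that were retrieved.
    return precision_sum / hits if hits else 0.0


# Two hypothetical rankings with the same two relevant documents.
early = [1, 0, 1, 0, 0]  # relevant documents near the top
late = [0, 0, 1, 0, 1]   # relevant documents near the bottom
print(round(average_precision(early), 4))  # 0.8333
print(round(average_precision(late), 4))   # 0.3667
```

Both lists have the same precision and recall over the whole list, but AP is higher when the relevant documents appear earlier.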

MAP (mean AP): the mean of the AP values over many retrieval classes (queries).
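A rough sketch of the averaging step, assuming one ranked 0/1 relevance list per class. The class names, the data, and both function names are hypothetical, and AP is normalized by the relevant documents that were retrieved.

```python
def average_precision(relevance):
    """AP for one ranked list of 0/1 relevance flags."""
    hits, precision_sum = 0, 0.0
    for r, rel in enumerate(relevance, start=1):
        if rel:
            hits += 1
            precision_sum += hits / r
    return precision_sum / hits if hits else 0.0


def mean_average_precision(relevance_by_class):
    """Unweighted mean of the per-class AP values."""
    aps = [average_precision(rel) for rel in relevance_by_class.values()]
    return sum(aps) / len(aps)


# Hypothetical retrieval results for three classes.
results = {
    "cat": [1, 0, 1, 0, 0],
    "dog": [0, 1, 0, 0, 1],
    "car": [1, 1, 0, 0, 0],
}
for name, rel in results.items():
    print(name, round(average_precision(rel), 4))
print("MAP", round(mean_average_precision(results), 4))
```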

F measure

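The larger beta is, the more weight is placed on recall; a beta below 1 places more weight on precision.

Commonly used variants are F1, F2, and F0.5.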
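For reference, the standard definition is F_beta = (1 + beta^2) * P * R / (beta^2 * P + R), where P is precision and R is recall. The sketch below evaluates it for the three common values; the function name and the sample precision and recall are assumptions for illustration.

```python
def f_beta(precision, recall, beta=1.0):
    """Weighted harmonic mean of precision and recall."""
    if precision == 0 and recall == 0:
        return 0.0  # convention chosen here to avoid dividing by zero
    b2 = beta ** 2
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Hypothetical system with high precision and lower recall.
p, r = 0.8, 0.5
for beta in (0.5, 1.0, 2.0):
    print(f"F{beta:g} = {f_beta(p, r, beta):.4f}")
```

With precision above recall, F0.5 comes out highest and F2 lowest, because F2 is pulled toward the weaker recall value.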
